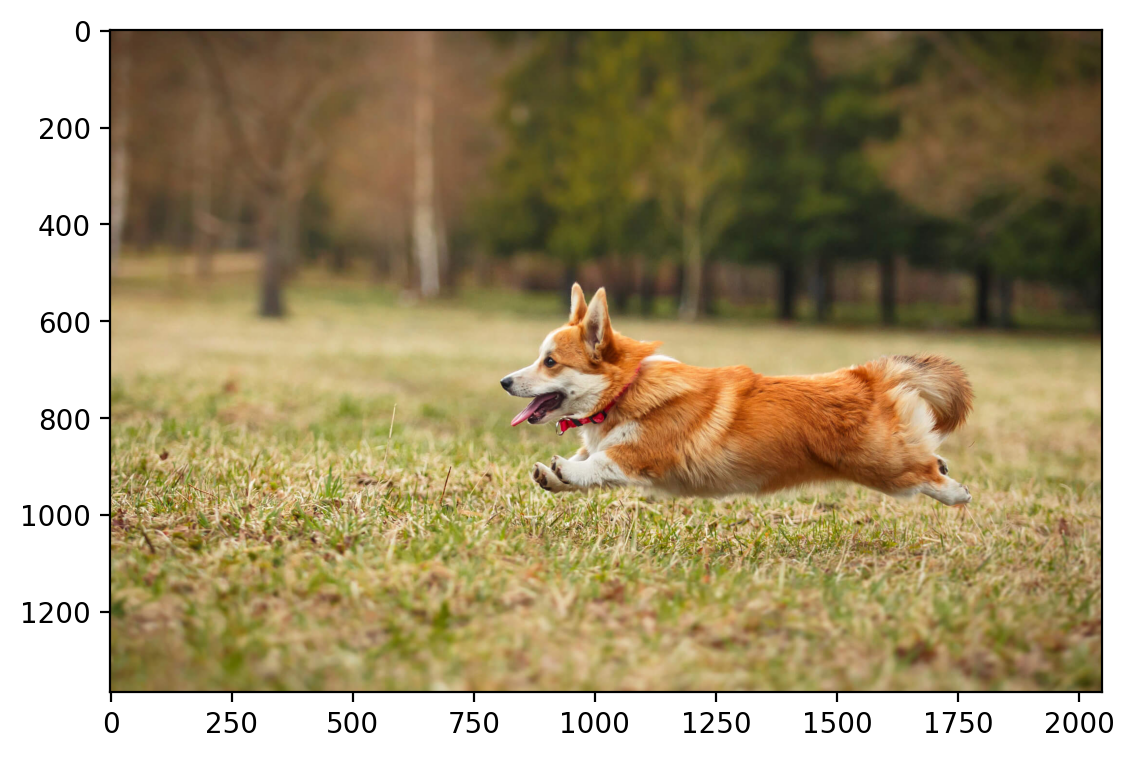

torch.Size([3, 1365, 2048])

tensor([[[0.3098, 0.3137, 0.3137, ..., 0.2941, 0.2941, 0.2980],
         [0.3098, 0.3137, 0.3137, ..., 0.2941, 0.2941, 0.2980],
         [0.3098, 0.3137, 0.3137, ..., 0.2941, 0.2941, 0.2980],
         ...,
         [0.4745, 0.4745, 0.4784, ..., 0.3804, 0.3765, 0.3765],
         [0.4745, 0.4745, 0.4784, ..., 0.3804, 0.3804, 0.3765],
         [0.4745, 0.4745, 0.4784, ..., 0.3843, 0.3804, 0.3804]],

        [[0.2039, 0.2078, 0.2078, ..., 0.2157, 0.2157, 0.2118],
         [0.2039, 0.2078, 0.2078, ..., 0.2157, 0.2157, 0.2118],
         [0.2039, 0.2078, 0.2078, ..., 0.2157, 0.2157, 0.2118],
         ...,
         [0.4039, 0.4039, 0.4078, ..., 0.3216, 0.3176, 0.3176],
         [0.4039, 0.4039, 0.4078, ..., 0.3216, 0.3216, 0.3176],
         [0.4039, 0.4039, 0.4078, ..., 0.3255, 0.3216, 0.3216]],

        [[0.1373, 0.1412, 0.1412, ..., 0.1176, 0.1176, 0.1176],
         [0.1373, 0.1412, 0.1412, ..., 0.1176, 0.1176, 0.1176],
         [0.1373, 0.1412, 0.1412, ..., 0.1176, 0.1176, 0.1176],
         ...,
         [0.1451, 0.1451, 0.1490, ..., 0.1686, 0.1647, 0.1647],
         [0.1451, 0.1451, 0.1490, ..., 0.1686, 0.1686, 0.1647],
         [0.1451, 0.1451, 0.1490, ..., 0.1725, 0.1686, 0.1686]]],
       device='cuda:0', dtype=torch.float64)
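
The printed shape has 3 as its leading dimension, which suggests a channels-first layout (channels, height, width). The values are consistent with 8-bit intensities divided by 255 (for example, 0.3098 ≈ 79/255). The code that produced this output is not shown, so the following is only a minimal sketch of how a tensor with the same layout, dtype, and value range could be built. The `pixels` array and its small size are hypothetical stand-ins for the decoded image.

```python
import torch

# Hypothetical stand-in for the decoded image: H x W x C, 8-bit values.
# A small random array is used so the sketch runs without the image file.
height, width = 4, 6
pixels = torch.randint(0, 256, (height, width, 3), dtype=torch.uint8)

# Move channels first (C x H x W) and rescale to [0, 1] in double precision.
image = pixels.permute(2, 0, 1).to(torch.float64) / 255.0

# Use the GPU only when one is present; the output above was on 'cuda:0'.
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
image = image.to(device)

print(image.shape)  # channels, height, width
print(image.dtype)
```

With the real image in place of `pixels`, the same steps would be expected to give a tensor of shape `[3, 1365, 2048]`, assuming the image is 2048 pixels wide and 1365 pixels high.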