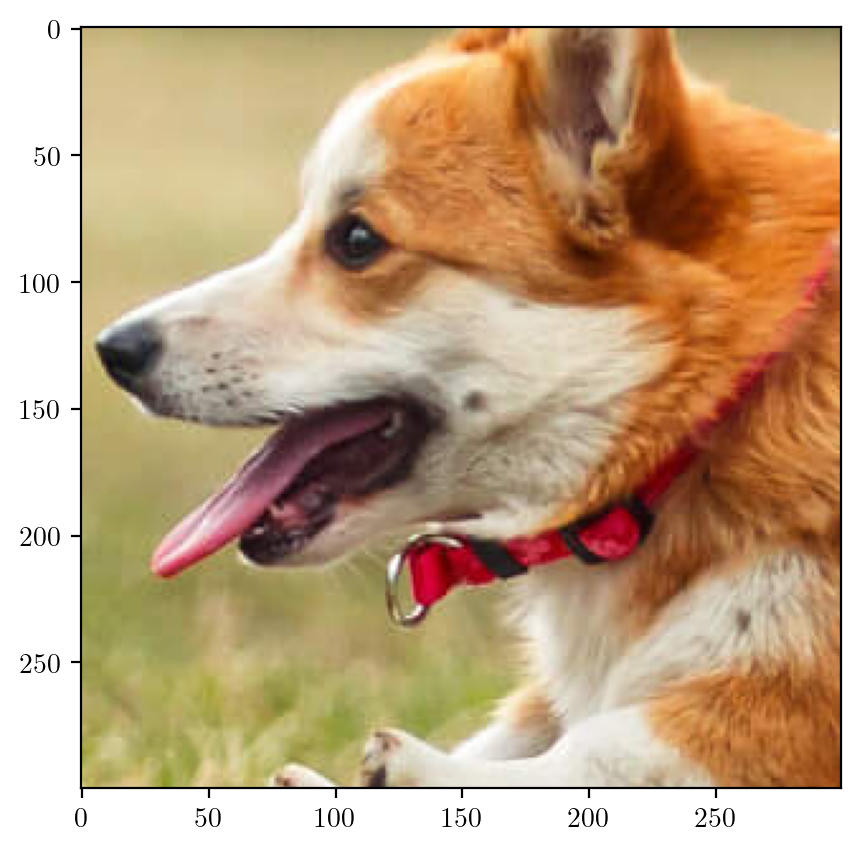

```
tensor([[[0.7686, 0.7686, 0.7686, ..., 0.5451, 0.5451, 0.5412],
         [0.7725, 0.7725, 0.7725, ..., 0.5686, 0.5686, 0.5647],
         [0.7765, 0.7765, 0.7765, ..., 0.5882, 0.5843, 0.5843],
         ...,
         [0.7961, 0.7373, 0.7176, ..., 0.5843, 0.5725, 0.5490],
         [0.8471, 0.7843, 0.7451, ..., 0.6039, 0.5686, 0.5569],
         [0.8784, 0.8196, 0.7686, ..., 0.6275, 0.5804, 0.5686]],

        [[0.6941, 0.6941, 0.6941, ..., 0.5137, 0.5137, 0.5098],
         [0.6980, 0.6980, 0.6980, ..., 0.5255, 0.5255, 0.5216],
         [0.7020, 0.7020, 0.7020, ..., 0.5451, 0.5412, 0.5412],
         ...,
         [0.7686, 0.7098, 0.6863, ..., 0.2824, 0.2706, 0.2431],
         [0.8157, 0.7569, 0.7137, ..., 0.3059, 0.2667, 0.2431],
         [0.8431, 0.7882, 0.7333, ..., 0.3294, 0.2706, 0.2588]],

        [[0.4745, 0.4745, 0.4745, ..., 0.3294, 0.3294, 0.3255],
         [0.4784, 0.4784, 0.4784, ..., 0.3451, 0.3451, 0.3412],
         [0.4824, 0.4824, 0.4824, ..., 0.3647, 0.3608, 0.3608],
         ...,
         [0.4549, 0.3922, 0.3529, ..., 0.0784, 0.0667, 0.0510],
         [0.5216, 0.4510, 0.3961, ..., 0.0784, 0.0471, 0.0353],
         [0.5725, 0.5020, 0.4314, ..., 0.0863, 0.0392, 0.0392]]],
       device='cuda:0')
```
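The printout has three outer blocks of rows, which is consistent with a channel-first `(C, H, W)` image tensor with three color channels, stored on the first GPU (`device='cuda:0'`). The values also look like 8-bit pixel intensities divided by 255 (for example 0.7686 ≈ 196/255), which is what torchvision's `ToTensor` produces for a uint8 RGB image; that this is how the tensor was actually created is an assumption. The sketch below uses only the standard library to check a few printed values against that reading. The helpers `to_uint8` and `is_scaled_uint8` are hypothetical, not part of PyTorch or torchvision.

```python
# Hypothetical helpers (not part of PyTorch or torchvision). They assume the
# tensor holds 8-bit pixels scaled into [0, 1] as value = k / 255.

def to_uint8(x: float) -> int:
    """Map a float in [0, 1] back to the nearest 8-bit intensity (0 to 255)."""
    return round(x * 255)


def is_scaled_uint8(x: float, decimals: int = 4) -> bool:
    """True if x equals k / 255 for some integer k at the printed precision."""
    k = to_uint8(x)
    return 0 <= k <= 255 and round(k / 255, decimals) == x


# First and last entries of the first and last printed rows of each channel.
samples = {
    "channel 0": [0.7686, 0.5412, 0.8784, 0.5686],
    "channel 1": [0.6941, 0.5098, 0.8431, 0.2588],
    "channel 2": [0.4745, 0.3255, 0.5725, 0.0392],
}

for channel, values in samples.items():
    pixels = [to_uint8(v) for v in values]
    consistent = all(is_scaled_uint8(v) for v in values)
    print(channel, pixels, consistent)
```

If the assumption holds, the inverse conversion on the tensor itself would be along the lines of `(t * 255).round().to(torch.uint8)`, followed by `.cpu()` if the result is needed off the GPU.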