import pandas as pd

import matplotlib.pyplot as plt

from latex import latexify, format_axes

import numpy as np

import tsfel

from sklearn.tree import DecisionTreeClassifier

from sklearn.tree import export_graphviz

from sklearn import tree

import graphviz

from sklearn.metrics import classification_report, confusion_matrix, accuracy_score

import seaborn as sns

from MakeDataset import *

%matplotlib inline

# Retina

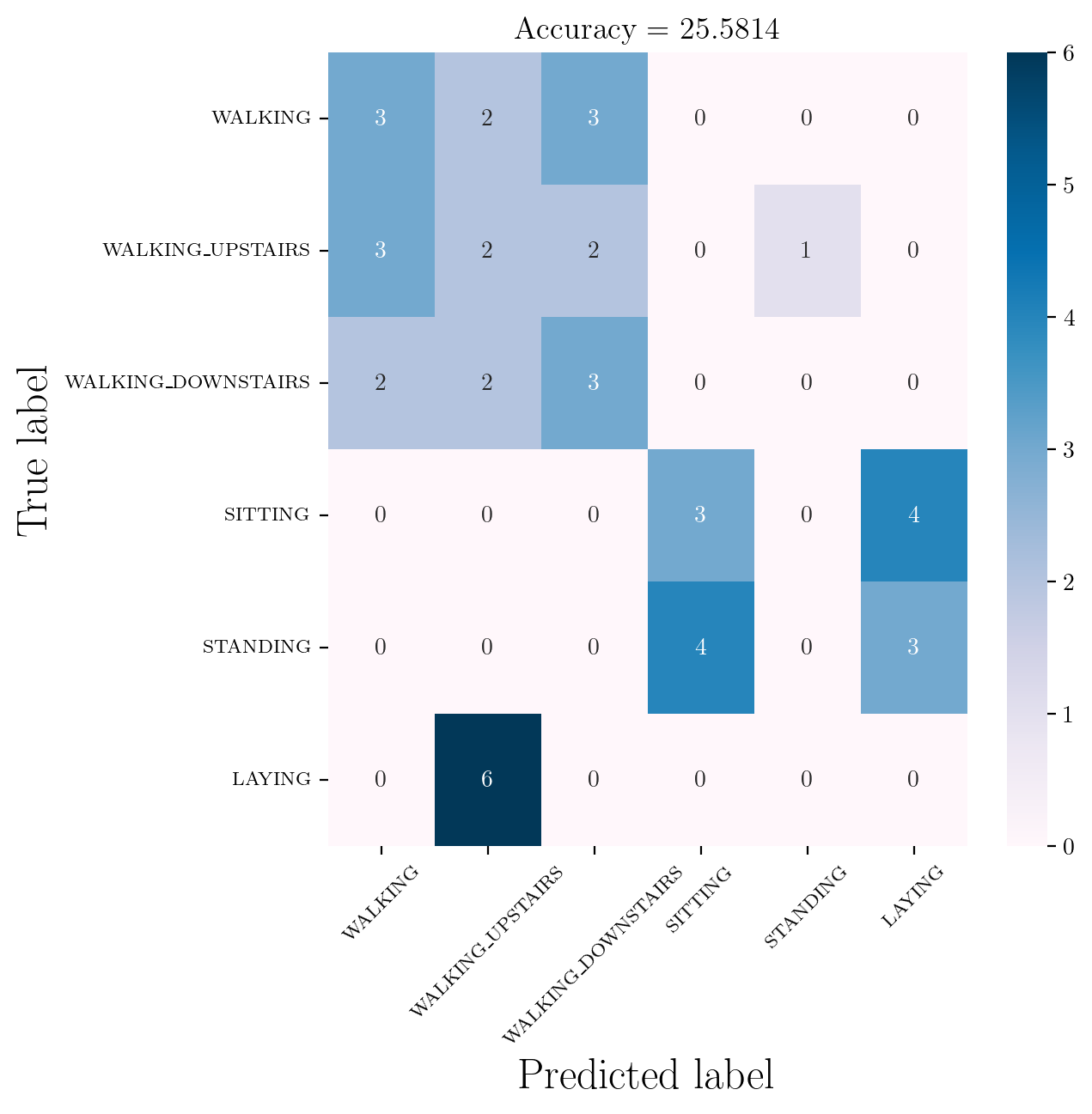

%config InlineBackend.figure_format = 'retina'Training data shape: (108, 500, 3)

Testing data shape: (36, 500, 3)

Validation data shape: (36, 500, 3)